ChatGPT is one of the shiniest new AI-powered tools, but the algorithms working in the background have actually been powering a whole range of apps and services since 2020. So to understand how ChatGPT works, we need to start by talking about the underlying language engine that powers it.

The GPT in ChatGPT is mostly GPT-3, or the Generative Pre-trained Transformer 3, though GPT-4 is now available for ChatGPT Plus subscribers—and will probably become more widespread soon. The GPT models were developed by OpenAI (the company behind ChatGPT and the image generator DALL·E 2), but they power everything from Bing's AI features to writing tools like Jasper and Copy.ai. In fact, most of the AI text generators available at the moment use GPT-3, and will likely offer GPT-4 as a next step.

ChatGPT brought GPT-3 into the limelight because it made the process of interacting with an AI text generator simple and—most importantly—free to everyone. Plus, it's a chatbot, and people have loved a good chatbot since SmarterChild.

While GPT-3 and GPT-4 are the most popular Large Language Models (LLMs) right now, there's likely to be a lot more competition over the next few years. Google, for example, has Bard, its AI chatbot, which is powered by its own language engine, Pathways Language Model (PaLM 2). But for now, OpenAI's offering is the de facto industry standard. It's just the easiest tool for people to get their hands on.

So the answer to "how does ChatGPT work?" is basically: GPT-3 and GPT-4. But let's dig a little deeper.

What is ChatGPT?

ChatGPT is an app built by OpenAI. Using the GPT language models, it can answer your questions, write copy, draft emails, hold a conversation, explain code in different programming languages, translate natural language to code, and more—or at least try to—all based on the natural language prompts you feed it. It's a chatbot, but a really, really good one.

While it's cool to play around with if, say, you want to write a Shakespearean sonnet about your pet or get a few ideas for subject lines for some marketing emails, it's also good for OpenAI. It's a way to get a lot of data from real users and serves as a fancy demo for the power of GPT, which could otherwise feel a little fuzzy unless you were deep into machine learning.

Right now, ChatGPT offers two GPT models. The default, GPT-3.5, is less powerful but available to everyone for free. The more advanced GPT-4 is limited to ChatGPT Plus subscribers, and even they only get a limited number of questions every day.

One of ChatGPT's big features is that it can remember the conversation you're having with it. This means it can glean context from whatever you've asked it previously and then use that to inform its conversation with you. You're also able to ask for reworks and corrections, and it will refer back to whatever you'd been discussing before. It makes interacting with the AI feel like a genuine back-and-forth.

If you want to really get a feel for it, go and spend five minutes playing with ChatGPT now (it's free!), and then come back to read about how it works.

How does ChatGPT work?

ChatGPT works by attempting to understand your prompt and then spitting out strings of words that it predicts will best answer your question, based on the data it was trained on.

Let's actually talk about that training. It's a process where the nascent AI is given some ground rules, and then it's either put in situations or given loads of data to work through in order to develop its own algorithms.

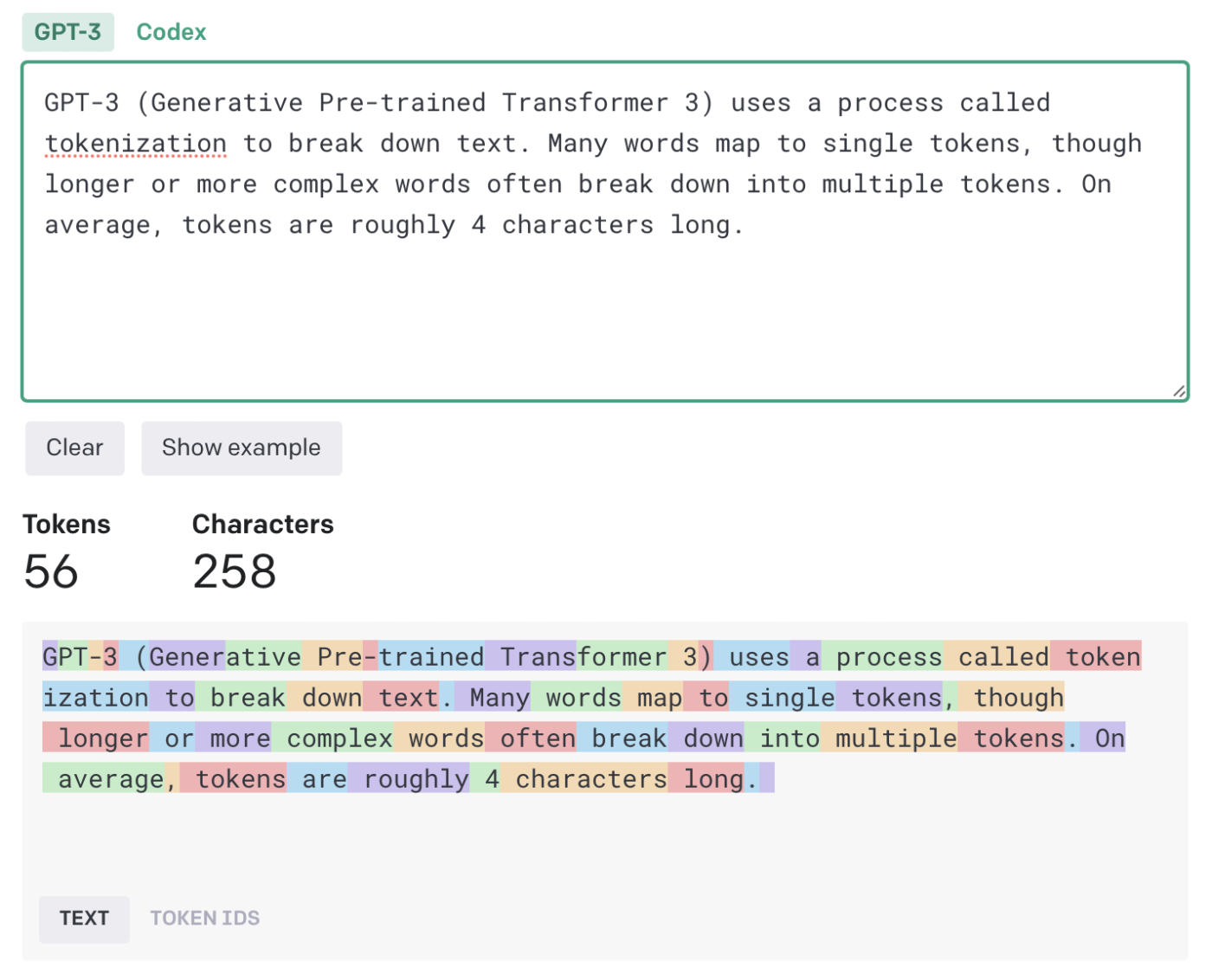

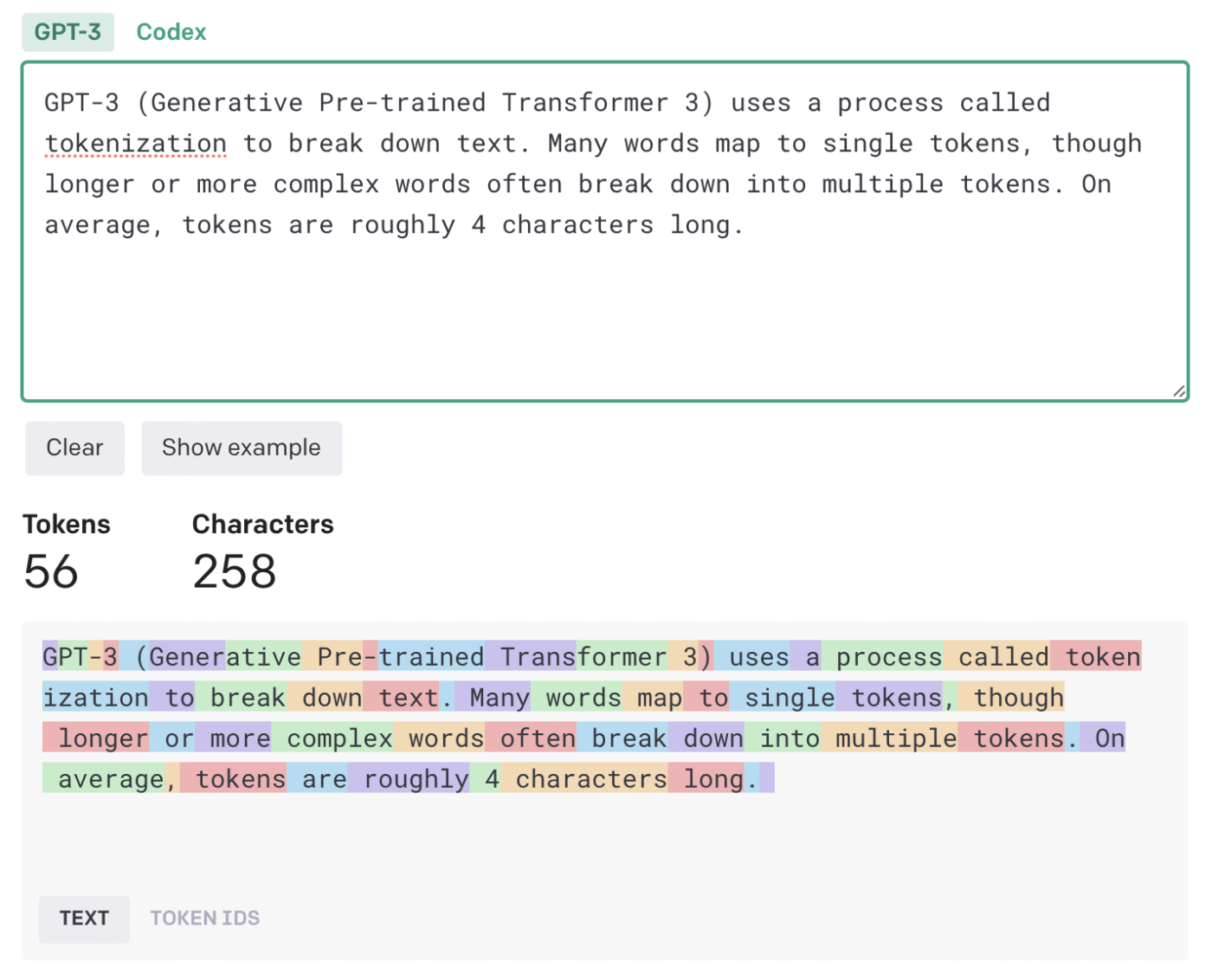

GPT-3 was trained on roughly 500 billion "tokens," the chunks of text that let its language models more easily assign meaning and predict plausible follow-on text. Many words map to single tokens, though longer or more complex words often break down into multiple tokens. On average, tokens are roughly four characters long. OpenAI has stayed quiet about the inner workings of GPT-4, but since it's even more powerful, it's reasonable to assume it was trained on a similar dataset.
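To make the four-characters-per-token figure concrete, here is a rough sketch that estimates a token count from character length. The function name `estimate_tokens` and the flat divide-by-four rule are illustrative assumptions: real tokenizers split text using a learned vocabulary, so actual counts will differ.

```python
import math

# Rough average quoted above; an approximation, not a tokenizer rule.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Estimate how many tokens `text` would use, based only on its length."""
    if not text:
        return 0
    return math.ceil(len(text) / CHARS_PER_TOKEN)


if __name__ == "__main__":
    prompt = "Zapier is a web-based automation tool."
    print(f"{len(prompt)} characters is roughly {estimate_tokens(prompt)} tokens")
```

This is only good for ballpark figures, such as guessing whether a long prompt is likely to fit within a model's limit.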

All the tokens came from a massive corpus of data written by humans. That includes books, articles, and other documents across all different topics, styles, and genres—and an unbelievable amount of content scraped from the open internet. Basically, it was allowed to crunch through the sum total of human knowledge.

This humongous dataset was used to form a deep learning neural network—a complex, many-layered, weighted algorithm modeled after the human brain—which allowed ChatGPT to learn patterns and relationships in the text data and tap into the ability to create human-like responses by predicting what text should come next in any given sentence.

Though really, that massively undersells things. ChatGPT doesn't work on a sentence level. Instead, it generates the words, sentences, and even paragraphs or stanzas that could follow. It's not the predictive text on your phone bluntly guessing the next word; it's attempting to create fully coherent responses to any prompt.
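For contrast, the blunt kind of next-word guessing can be sketched in a few lines. This toy counts which word most often follows another in a tiny made-up corpus. It is not how GPT works internally: GPT replaces these simple counts with a neural network that takes far more context into account. The function names and the corpus are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Optional


def train_bigrams(corpus: list[str]) -> dict[str, Counter]:
    """Count, for every word, which words follow it in the corpus."""
    follows: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


def predict_next(follows: dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


if __name__ == "__main__":
    corpus = [
        "zapier is a tool",
        "zapier is a platform",
        "zapier is a tool for automation",
    ]
    model = train_bigrams(corpus)
    print(predict_next(model, "a"))
```

Because it only ever looks at the previous word, a model like this loses the thread almost immediately, which is the gap the article is pointing at.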

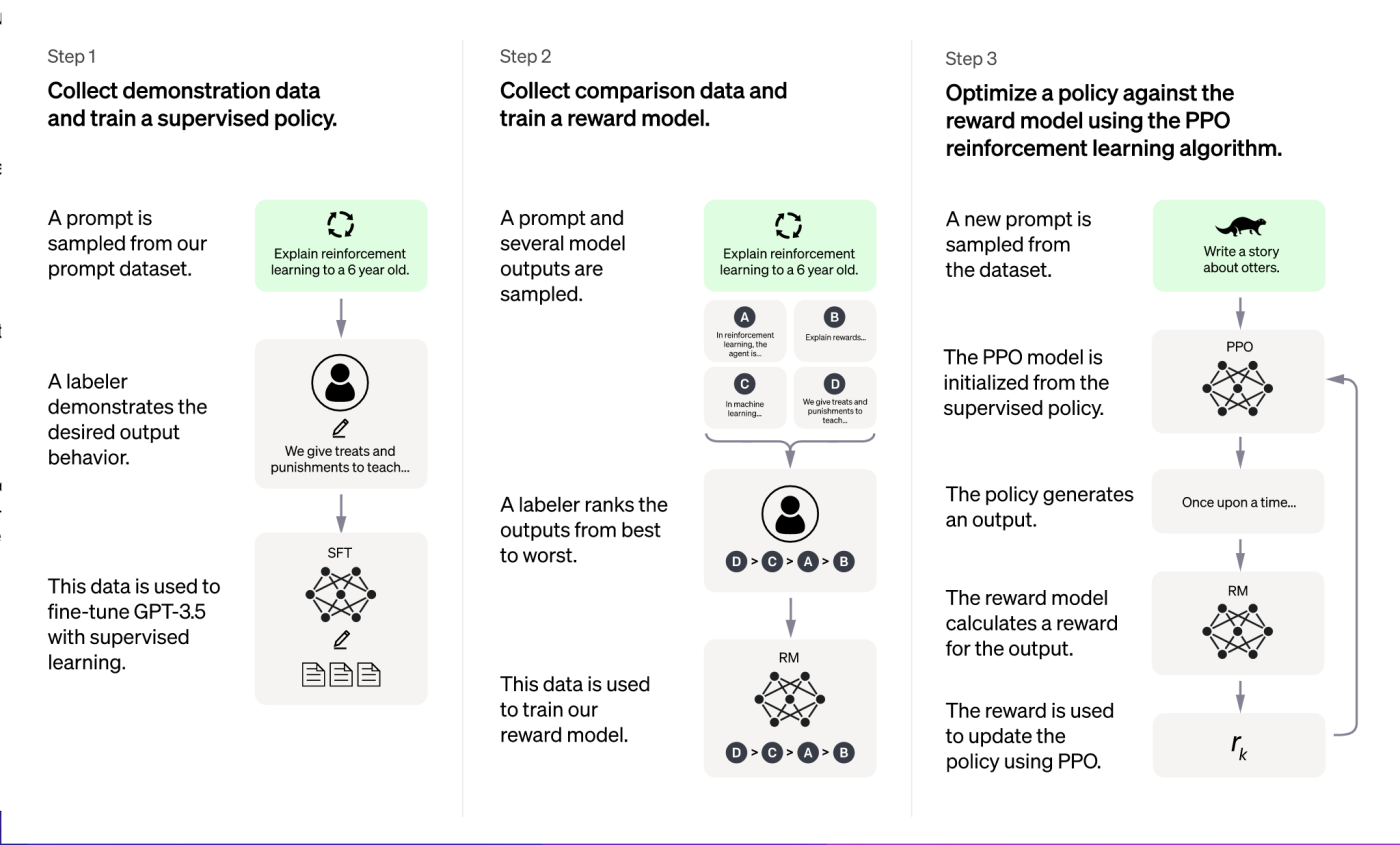

To further refine ChatGPT's ability to respond to a variety of different prompts, it was optimized for dialogue with a technique called reinforcement learning from human feedback (RLHF). Essentially, humans created a reward model with comparison data (where two or more model responses were ranked by AI trainers), so the AI could learn which was the best response.
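One common way to turn ranked comparisons into a training signal is a pairwise loss that is small when the preferred response scores higher than the rejected one. The sketch below assumes that formulation. The article doesn't say which loss OpenAI used, and the scores here are made-up numbers standing in for a reward model's outputs.

```python
import math


def pairwise_ranking_loss(score_preferred: float, score_rejected: float) -> float:
    """Compute -log(sigmoid(preferred - rejected)).

    The loss is low when the preferred response already scores higher,
    and high when the reward model has the ranking backwards.
    """
    margin = score_preferred - score_rejected
    # -log(sigmoid(margin)) equals log(1 + exp(-margin)); this form avoids overflow.
    return max(-margin, 0.0) + math.log1p(math.exp(-abs(margin)))


if __name__ == "__main__":
    # Hypothetical reward scores for two responses to the same prompt.
    print(pairwise_ranking_loss(2.0, 0.0))  # ranking respected: small loss
    print(pairwise_ranking_loss(0.0, 2.0))  # ranking violated: large loss
```

Training a reward model with a loss like this nudges it to score trainer-preferred responses higher, and those scores can then guide the chatbot itself.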

Back to the neural network it formed. Based on all that training, GPT-3's neural network has 175 billion parameters or variables that allow it to take an input (your prompt) and then, based on the values and weightings it gives to the different parameters (and a small amount of randomness), output whatever it thinks best matches your request. OpenAI hasn't said how many parameters GPT-4 has, but it's a safe guess that it's more than 175 billion and less than the once-rumored 100 trillion parameters. Regardless of the exact number, more parameters doesn't automatically mean better. Some of GPT-4's increased power probably comes from having more parameters than GPT-3, but a lot is probably down to improvements in how it was trained.
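To get a feel for the scale of 175 billion parameters, here is a back-of-the-envelope calculation of how much storage the weights alone would need. The two-bytes-per-parameter default (16-bit numbers) is an assumption for illustration; the article doesn't say how the parameters are stored.

```python
GPT3_PARAMETERS = 175_000_000_000  # figure quoted above


def weights_size_gb(parameters: int, bytes_per_parameter: int = 2) -> float:
    """Approximate storage for the weights alone, in decimal gigabytes."""
    return parameters * bytes_per_parameter / 1e9


if __name__ == "__main__":
    # Assumes 16-bit (2-byte) parameters; 32-bit storage would double this.
    print(f"{weights_size_gb(GPT3_PARAMETERS):.0f} GB")
```

Under that assumption the weights come to roughly 350 GB, far more than a typical laptop or phone can hold in memory.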

In the end, the simplest way to imagine it is like one of those "finish the sentence" games you played as a kid. For example, when I gave ChatGPT using GPT-3 the prompt, "Zapier is…" it responded saying:

"Zapier is a web-based automation tool that allows users to connect different web applications together in order to automate repetitive tasks and improve workflows."

That's the kind of sentence you can find in hundreds of articles describing what Zapier does, so it makes sense that it's the kind of thing that it spits out here. But when my editor gave it the same prompt, it said:

"Zapier is a web-based automation tool that allows users to connect different web applications and automate workflows between them."

That's pretty similar, but it isn't exactly the same response. That randomness (which you can control in some GPT-3 apps with a setting called "temperature") ensures that ChatGPT isn't just responding to every single prompt with what amounts to a stock answer. It's running each prompt through the entire neural network each time, and rolling a couple of dice here and there to keep things fresh. It's not likely to start claiming that Zapier is a color from Mars, but it will mix up the following words based on their relative likelihoods.

(For what it's worth, when running on GPT-4, ChatGPT said: "Zapier is a web-based automation tool that allows users to integrate and automate tasks between various online applications and services." Much the same!)
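The dice-rolling described above can be sketched as sampling from a set of candidate next words, with temperature controlling how adventurous the pick is. The candidate words and their scores below are invented for illustration, and the function names are hypothetical. A real model scores tens of thousands of tokens at every step.

```python
import math
import random


def softmax_with_temperature(scores: dict[str, float], temperature: float) -> dict[str, float]:
    """Turn raw scores into probabilities; lower temperature sharpens the distribution."""
    if temperature <= 0:
        raise ValueError("this sketch requires a positive temperature")
    scaled = {word: score / temperature for word, score in scores.items()}
    top = max(scaled.values())  # subtract the max for numerical stability
    exps = {word: math.exp(value - top) for word, value in scaled.items()}
    total = sum(exps.values())
    return {word: value / total for word, value in exps.items()}


def sample_next_word(scores: dict[str, float], temperature: float, rng: random.Random) -> str:
    """Pick one word at random, weighted by its temperature-adjusted probability."""
    probs = softmax_with_temperature(scores, temperature)
    words = list(probs)
    return rng.choices(words, weights=[probs[w] for w in words], k=1)[0]


if __name__ == "__main__":
    # Made-up scores for words that might follow "Zapier is a web-based automation ..."
    scores = {"tool": 3.0, "platform": 2.0, "color": -4.0}
    rng = random.Random()
    for temperature in (0.1, 1.0, 2.0):
        picks = [sample_next_word(scores, temperature, rng) for _ in range(10)]
        print(temperature, picks)
```

At a low temperature, nearly every pick is the top-scoring word. At higher temperatures, the runner-up shows up more often, while the very unlikely word stays rare.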

Spitting out words, not knowledge

The makers of ChatGPT are the first to say that it can produce incorrect (and possibly harmful) information, though they are working hard to fix it.

Here's a tame example. When I asked ChatGPT using GPT-3 and GPT-4 to reply to "Harry Guinness is…" GPT-3 first replied by asking which Harry Guinness. Great question—there are literally two of us! So I said, "Harry Guinness the writer" (that's me). GPT-4 guessed who I was straight away, though both responses were fascinating:

GPT-3: Harry Guinness is a freelance writer and journalist based in Ireland. He has written for a variety of publications, including The New York Times, The Guardian, The Huffington Post, and Popular Mechanics. He covers topics ranging from technology and photography to travel and culture.

GPT-4: Harry Guinness is an Irish writer, photographer, and technology expert. He has written for various publications, including How-To Geek, Lifehacker, and Tuts+. His work primarily focuses on technology, tutorials, and tips, as well as photography and image editing. Guinness has built a reputation for creating detailed guides that help readers understand complex subjects or solve problems in a straightforward manner.

GPT-3's first and last lines are pulled almost verbatim from my various websites and author bios around the web (although I normally list myself as a freelance writer and photographer, not a journalist). But the list of publications is basically made up. I've written for The New York Times, but not for The Guardian, The Huffington Post, or Popular Mechanics (I do write regularly for Popular Science, so that might be where that came from).

GPT-4 gets the photographer part right and actually lists some publications I've written for, which is impressive, though they're not the ones I'd be most proud of. It's a great example of how OpenAI has been able to increase the accuracy of GPT-4 relative to GPT-3, though it might not always offer the most correct answer.

But let's go back to GPT-3 as its error provides an interesting example of what's going on behind the scenes in ChatGPT. It doesn't actually know anything about me. It's not even copy/pasting from the internet and trusting the source of the information. Instead, it's simply predicting a string of words that will come next based on the billions of data points it has.

For example: The New York Times is grouped far more often with The Guardian and The Huffington Post than it is with the places I've written for, like Wired, Outside, The Irish Times, and, of course, Zapier. So when it has to work out what should follow on from The New York Times, it doesn't pull from the published information about me; it pulls that list of large publications from all the training data it has. It's very clever and looks plausible, but it isn't true.

GPT-4 does a much better job and nails the publications, but the rest of what it says really just feels like plausible follow-on sentences. I don't think it has any great appreciation for my reputation: it's just saying the kind of thing a bio says. It's far better at hiding how it works than GPT-3, though it's actually using much the same technique.

Still, it's very impressive how much GPT has already improved. For now, GPT-4 is locked behind a premium subscription, so most ChatGPT content you see will rely on GPT-3, but that may change over the next while. Who knows what GPT-5 will bring.

What is the ChatGPT API?

OpenAI doesn't have a just-us attitude with its technology. The company has an API platform that allows developers to integrate the power of ChatGPT into their own apps and services (for a price, of course).
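As a rough illustration of what the developer side involves, the sketch below builds (but does not send) a request for OpenAI's chat completions endpoint using only the Python standard library. The helper name `build_chat_request`, the `gpt-3.5-turbo` model choice, the temperature value, and the placeholder API key are assumptions for illustration. Check OpenAI's API reference for current models and parameters.

```python
import json
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(
    api_key: str,
    messages: list[dict],
    model: str = "gpt-3.5-turbo",  # assumed model name; others may be available
    temperature: float = 0.7,
) -> urllib.request.Request:
    """Build an HTTP POST request carrying the conversation so far."""
    payload = {"model": model, "messages": messages, "temperature": temperature}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# With this endpoint, earlier turns are sent along with each new request,
# which is how a conversation keeps its context.
conversation = [{"role": "user", "content": "Zapier is..."}]
request = build_chat_request("YOUR_API_KEY", conversation)  # placeholder key

# Sending it needs a valid key and network access:
# with urllib.request.urlopen(request) as response:
#     print(json.load(response))
```

Keeping the request-building separate from the sending makes it easy to inspect exactly what would go over the wire before spending any API credits.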

Zapier uses the ChatGPT API to power its own ChatGPT integration, which lets you connect ChatGPT to thousands of other apps and add AI to your business-critical workflows. You can trigger ChatGPT from basically any app.

You can also make use of OpenAI's other models—like DALL·E and Whisper—with Zapier's OpenAI integration. Automate workflows that involve image generation and audio transcription, straight from the apps you're already using.
